Our main mathematical tool to model alpine ski is known as isogeometric analysis (IGA), which is basically an extension of the finite element method (FEM) using non-uniform rational B-splines (NURBS) as basis functions. Being introduced in 2005 by Hughes et al., followed by a book in 2009, IGA tries to bridge the gap between finite element analysis and CAD design tools. The important future of IGA is that it uses the same basis as CAD software for describing the given geometry, and thus exact representation of the model is possible. It is therefore natural to include a section considering this basis in the beginning before we set up the IGA. Note that only the relevant parts of the IGA will be presented, and even here we shall be brief. The text assumes the reader is somewhat familiar which the finite element method.

The underlying assumption for our analysis is linear elasticity. The resulting equations will be the fundamental equations to which we apply IGA. The notation will be presented and the complete partial differential equation will be presented.

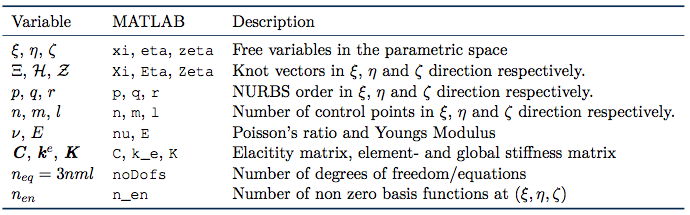

Initially the IGA implementation was entirely based on the library given in the article “Isogeometric analysis: an overview and computer implementation aspects”. This library have implemented NURBS calculations in C, and have a lot of other artillery which we will not need. We have rewritten all code implemented in C into MATLAB, and many more adjustments have been done, even some optimalizations concerning how the sparse global stiffness matrix is built. In this regard it must be noted that the original code written in C is faster then the corresponding rewritten code in MATLAB. It was not necessary to rewrite all parts of the library, so only the parts which are edited will be presented in whats follows. For the remaining part of the code, we refer to the article “Isogeometric analysis: an overview and computer implementation aspects”. When presenting the theory, we shall consecutively present the corresponding implementation in MATLAB. In this regard we present explanations for the most important variables used, and writes the corresponding variable names used in MATLAB, in the table below.

NURBS

The NURBS basis is built by B-splines. So an understanding of B-splines is crucial to understanding NURBS. Let ![]() be the polynomial order, let

be the polynomial order, let ![]() be the number of functions and let

be the number of functions and let ![]() be an ordered vector with non decreasing elements (referred to as a knot vector). Then, the B-spline

be an ordered vector with non decreasing elements (referred to as a knot vector). Then, the B-spline ![]() are recursively defined by (for

are recursively defined by (for ![]() )

)

(1)

and for ![]() we have

we have

![]()

This forumla is referred to as Cox-deBoor formula, and the derivative of a spline may be computed by

![]()

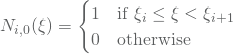

Throughout the project we shall use open knot vectors. That is, the first and last element in the vector is repeated ![]() times. Moreover, we shall use normalized knot vectors, which simply spans from 0 to 1. We shall build upon this information to optimize algorithms. When we want to evaluate a B-spline at a fixed

times. Moreover, we shall use normalized knot vectors, which simply spans from 0 to 1. We shall build upon this information to optimize algorithms. When we want to evaluate a B-spline at a fixed ![]() it is important to note that it is very redundant to evaluate all

it is important to note that it is very redundant to evaluate all ![]() basis function. This is due to the small support of each function. In fact, it turns out that only (at most)

basis function. This is due to the small support of each function. In fact, it turns out that only (at most) ![]() basis functions are non-zero at

basis functions are non-zero at ![]() . It is important to then only use these function to have an efficient code. One typically then implements a function which find the span corresponding to a given

. It is important to then only use these function to have an efficient code. One typically then implements a function which find the span corresponding to a given ![]() . Due to the ordering of the knot vector, we may use a binary search algorithm for finding this span. The span will be defined by the index

. Due to the ordering of the knot vector, we may use a binary search algorithm for finding this span. The span will be defined by the index ![]() corresponding to the first basis function which is non-zero at

corresponding to the first basis function which is non-zero at ![]() . The following listing represents the algorithm called findKnotSpan.m:

. The following listing represents the algorithm called findKnotSpan.m:

As an example, if ![]() and

and ![]() and we want to evaluate a B-spline with the knot vector

and we want to evaluate a B-spline with the knot vector ![]() , we get the index

, we get the index ![]() if

if ![]() ,

, ![]() if

if ![]() and

and ![]() if

if ![]() or

or ![]() .

.

We are now ready to implmenet a program which evaluates B-splines using the previous routine. When the recursion formula is used to evaluate the ![]() functions which are non-zero at

functions which are non-zero at ![]() , the function

, the function ![]() is the only function of order zero which is non-zero at

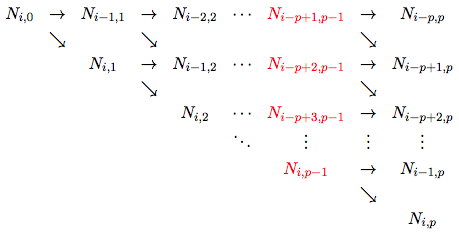

is the only function of order zero which is non-zero at ![]() . Everything is thus build from this function. We then have the following graph

. Everything is thus build from this function. We then have the following graph

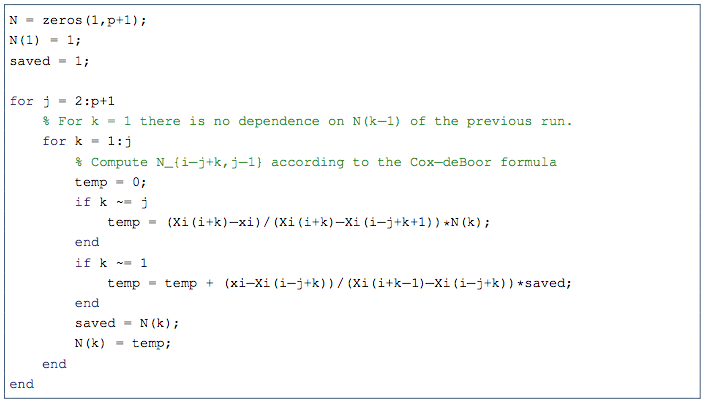

where the values colored red is used to calculate the nonzero derivatives at the same ![]() . It is then clear that we need two loops. The outer loop should iterate over the columns of this graph and the inner loop should iterate over the rows. The output of the function Bspline_basis is simply an array N which contains the

. It is then clear that we need two loops. The outer loop should iterate over the columns of this graph and the inner loop should iterate over the rows. The output of the function Bspline_basis is simply an array N which contains the ![]() functions which are evaluated at

functions which are evaluated at ![]() . To save memory one should also store the intermediate values

. To save memory one should also store the intermediate values ![]() in this same array. Thus, we need to store N(k-1) to compute N(k) (which we store in the variable saved). Here,

in this same array. Thus, we need to store N(k-1) to compute N(k) (which we store in the variable saved). Here, ![]() is the loop index of the outer loop, while

is the loop index of the outer loop, while ![]() is the loop index of the inner loop. The first iteration (when

is the loop index of the inner loop. The first iteration (when ![]() ) should be done seperately with 1. Finally, we use the convention that

) should be done seperately with 1. Finally, we use the convention that ![]() .

.

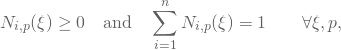

A way to veryfy this implementation is to check that the nonegativity and the partion of unity holds,

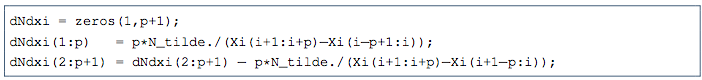

which is important properties of B-splines. We will finally need to compute the corresponding derivatives. Once again, only ![]() derivatives will be non zero at

derivatives will be non zero at ![]() . Let N_tilde be an array containing the red values in the previous graph. We may then compute these as in the following listing

. Let N_tilde be an array containing the red values in the previous graph. We may then compute these as in the following listing

Since we only need these derivatives also when the corresponding values for the basis function is needed, we extend the function Bspline_basis into a new function called Bspline_basisDers. We then avoid redundant calculations.

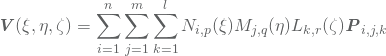

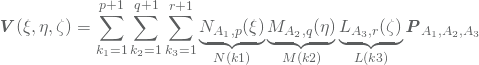

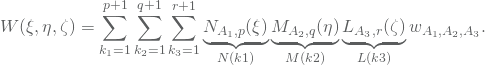

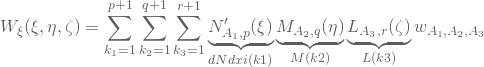

A B-spline volume may now be represented by

(2)

where ![]() contains the

contains the ![]() controlpoint of the volume. For a given

controlpoint of the volume. For a given ![]() , this formula does a lot of redundant computations as discussed so far. Namely, given a

, this formula does a lot of redundant computations as discussed so far. Namely, given a ![]() , only

, only ![]() basis functions will contribute. If these nonzero basis functions evaluated at a given point

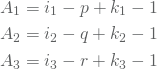

basis functions will contribute. If these nonzero basis functions evaluated at a given point ![]() are stored in M, N and L, we may rewrite 2 as

are stored in M, N and L, we may rewrite 2 as

where

and ![]() ,

, ![]() and

and ![]() are knot span index corresponding to

are knot span index corresponding to ![]() ,

, ![]() and

and ![]() , respectively. This observation will be used frequently when dealing with corresponding sums in the NURBS basis.

, respectively. This observation will be used frequently when dealing with corresponding sums in the NURBS basis.

Given a set of weights ![]() corresponding to each basis function the NURBS basis functions in 3D may be expressed by

corresponding to each basis function the NURBS basis functions in 3D may be expressed by

(3) ![]()

where

The partial derivatives of these functions is then given by the quotient rule

(4) ![]()

where

and

![]()

Similar expressions may be found for the partial derivatives with respect to ![]() and

and ![]() . We may now insert all of this into a routine which computes the nonzero basis functions at a given point

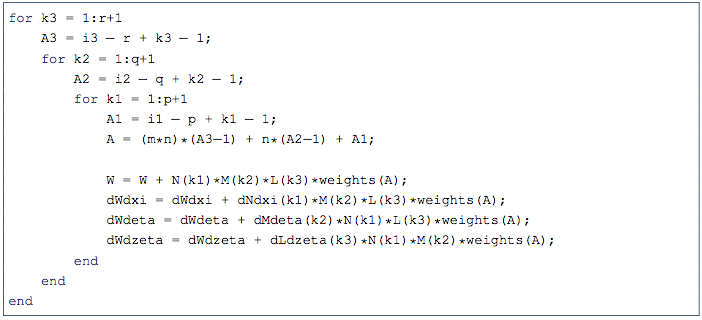

. We may now insert all of this into a routine which computes the nonzero basis functions at a given point ![]() and the corresponding nonzero derivatives. This function is called NURBS3DBasisDers. After finding the nonzero B-spline functions and their corresponding derivatives as discussed above, it continues by first finding the function

and the corresponding nonzero derivatives. This function is called NURBS3DBasisDers. After finding the nonzero B-spline functions and their corresponding derivatives as discussed above, it continues by first finding the function ![]() and it’s corresponding derivatives. This is illustrated in the following listing.

and it’s corresponding derivatives. This is illustrated in the following listing.

Note the use of the index A which is convenient when weights is located in an long array (which is the format we shall use when doing the IGA analysis). Finally, the function can now compute the NURBS basis alongside the corresponding derivatives as shown in the following listing.

Note the use of the intermediate variables fac and NML which is used to avoid redundant computations. The index a loops over the ![]() nonzero functions.

nonzero functions.

Linear elasticity

One important assumption for using linear elasticity is that only small deformations of the material occurs. This is due to the linearized quantities. We shall here not go in detail on how to derive the needed equations from Hooke’s law, but we present the notation used in the formulation of the isogeometric analysis.

The notations used, takes inspirations from the book by Hughes. In this section, the indices ![]() ,

, ![]() ,

, ![]() and

and ![]() will denote a specific spatial direction. We shall model everything in three dimensions, such that

will denote a specific spatial direction. We shall model everything in three dimensions, such that ![]() . Moreover,

. Moreover, ![]() shall denote the

shall denote the ![]() component of the vector

component of the vector ![]() and differentiation is denoted with a comma such that

and differentiation is denoted with a comma such that

![]()

Finally, we use the convention that if an index is repeated, it imply summation. That is,

![]()

and

![]()

Note that we do not sum over ![]() in the latter example since the quantities are separated by a plus sign. Define now the symmetric part of a general tensor

in the latter example since the quantities are separated by a plus sign. Define now the symmetric part of a general tensor ![]() to be

to be

![]()

and note that if ![]() is a symmetric tensor, then

is a symmetric tensor, then

(5) ![]()

That is, we can combine the components of ![]() which are equal to reduce redundant computations.

which are equal to reduce redundant computations.

Let now ![]() denote the Cartesian components of the Cauchy stress tensor and let

denote the Cartesian components of the Cauchy stress tensor and let ![]() denote the infinitesimal strain tensor which is defined by

denote the infinitesimal strain tensor which is defined by

![]()

We can now state the relation between ![]() as

as ![]() using the generalized Hooke’s law as

using the generalized Hooke’s law as

![]()

where ![]() are elastic coefficients. In the case of isotropic material, these coefficients are given by

are elastic coefficients. In the case of isotropic material, these coefficients are given by

![]()

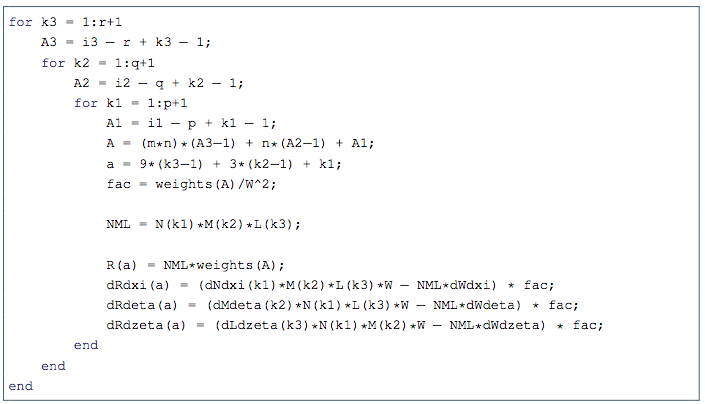

where the Kronecker delta function is given by

and the parameters ![]() and

and ![]() are Young’s modulus and Poisson’s ratio respectively. We are now ready to state the strong form of the linear elasticity problem in three dimensions.

are Young’s modulus and Poisson’s ratio respectively. We are now ready to state the strong form of the linear elasticity problem in three dimensions.

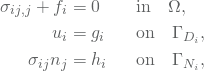

Let ![]() be the domain with a boundary

be the domain with a boundary ![]() which is composed of two parts;

which is composed of two parts; ![]() and

and ![]() . These are called Dirichlet and Neumann boundary conditions, respectively, and satisfies

. These are called Dirichlet and Neumann boundary conditions, respectively, and satisfies ![]() and

and ![]() . Moreover, let the functions

. Moreover, let the functions ![]() ,

, ![]() and

and ![]() be given. Then, find

be given. Then, find ![]() such that

such that

(6)

for ![]() . In this project, we will only consider boundaries which are entirely Neumann boundaries or Dirichlet boundaries, respectively. That is,

. In this project, we will only consider boundaries which are entirely Neumann boundaries or Dirichlet boundaries, respectively. That is, ![]() and

and ![]() for all

for all ![]() .

.

In this project, we neglect any body forces such as gravity, such that ![]() . Moreover, we shall only need homogeneous Dirichlet conditions such that

. Moreover, we shall only need homogeneous Dirichlet conditions such that ![]() .

.

The weak form and Galerkin’s Method

The weak form of the problem is derived from the strong form. Typically, one defines two classes of functions: ![]() denotes the solution space and

denotes the solution space and ![]() denotes the weighting space for a given spatial component

denotes the weighting space for a given spatial component ![]() . These spaces are made in order to handle non homogeneous Dirichlet boundary conditions, but since we have homogeneous Dirichlet boundary conditions, these spaces will be the same. That is,

. These spaces are made in order to handle non homogeneous Dirichlet boundary conditions, but since we have homogeneous Dirichlet boundary conditions, these spaces will be the same. That is, ![]() for

for ![]() . Typically,

. Typically, ![]() is a subspace of the Sobolev space

is a subspace of the Sobolev space ![]() (which consist of all functions which have square-integrable derivatives) with a condition such that the Dirichlet boundary condition is satisfied.

(which consist of all functions which have square-integrable derivatives) with a condition such that the Dirichlet boundary condition is satisfied.

We now multiply each of the equations in 6 by a corresponding test functions ![]() and sum these three equations into one single equation given by

and sum these three equations into one single equation given by

![]()

which can be written as

![]()

where

Integrating over the domain yields

(7) ![]()

Consider the divergence theorem

![]()

with ![]() . Note that

. Note that

![]()

which inserted in the divergence theorem yields

![]()

We may now insert this into 7 such that

(8) ![]()

Note that we only integrate over the Neumann part of the boundary since the integral over the vanishes (the test function ![]() is zero at this boundary). Also note that the domain of integration for the boundary integral is depending on

is zero at this boundary). Also note that the domain of integration for the boundary integral is depending on ![]() . Returning to the index summation convention we may rewrite 8 as

. Returning to the index summation convention we may rewrite 8 as

![]()

which using the boundary conditions, and the fact that ![]() for our case, may be written as

for our case, may be written as

![]()

Note that since ![]() is a symmetric tensor, we have used 5 to only write the symmetric part of

is a symmetric tensor, we have used 5 to only write the symmetric part of ![]() . If we now define the space

. If we now define the space ![]() we can state the weak formulation in a concise form: Find

we can state the weak formulation in a concise form: Find ![]() such that for all

such that for all ![]() we have

we have

![]()

where

(9) ![]()

and

![]()

Here we have used the relation ![]() .

.

We now want to transform this weak statement into a system of algebraic equations. We here apply Galerkin’s method and now turn to a finite-dimensional subspace ![]() . The basis for this subspace is the presented NURBS basis. But note that we will have vector valued control variables. The Galerkin approximation of the weak form is now given by: Find

. The basis for this subspace is the presented NURBS basis. But note that we will have vector valued control variables. The Galerkin approximation of the weak form is now given by: Find ![]() such that

such that

(10) ![]()

for all ![]() .

.

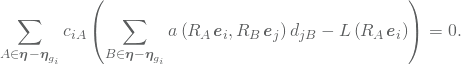

To find the system of algebraic equations we need to write ![]() as a linear combination of the basis functions. First, let

as a linear combination of the basis functions. First, let ![]() (where

(where ![]() is the number of basis functions) be the set containing the indices of all the functions in the NURBS basis defining the geometry and let

is the number of basis functions) be the set containing the indices of all the functions in the NURBS basis defining the geometry and let ![]() be the set containing the indices of all the basis functions that are non-zero on

be the set containing the indices of all the basis functions that are non-zero on ![]() . Due to the homogeneous Dirichlet boundary condition, we may write the

. Due to the homogeneous Dirichlet boundary condition, we may write the ![]() component of

component of ![]() and the

and the ![]() component of

component of ![]() as

as

(11) ![]()

respectively, where ![]() denotes set subtraction. Using the same index summation convention we may now write

denotes set subtraction. Using the same index summation convention we may now write

(12) ![]()

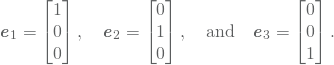

where the unit vectors ![]() are given by

are given by

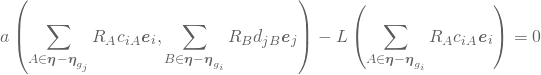

The finale step is now to insert 12 (using 11) into 10 such that we obtain a matrix formulation of the problem. Insertion yields

which using the bilinearity of ![]() and the linearity of

and the linearity of ![]() may be written as

may be written as

Since the coefficients ![]() is arbitrary (the relation should hold for all

is arbitrary (the relation should hold for all ![]() ) the term in the parentheses must vanish. That is, for all

) the term in the parentheses must vanish. That is, for all ![]() and

and ![]() we have

we have

![]()

One should typically make a system of the ordering of these equations. That is, one should create a function ![]() which collapse the indices

which collapse the indices ![]() and

and ![]() into a single index. A given equation then has the index

into a single index. A given equation then has the index ![]() and the index over all unknown components of the displacement vectors are called

and the index over all unknown components of the displacement vectors are called ![]() . The resulting system of equation may then be written as

. The resulting system of equation may then be written as

![]()

where

![]()

and

![]()

Assembly

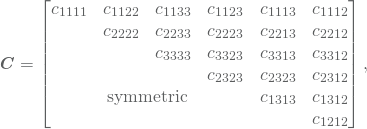

As for the finite element method, one typically do not loop through the basis functions. Rather, we loop through the elements constructing local stiffness matrices and successfully place their element in the global stiffness matrix. Let us first introduce some notations. The elastic coefficients are typically inserted in a matrix ![]() called the elasticity matrix. It is defined by

called the elasticity matrix. It is defined by

or in our case, more explicitly by

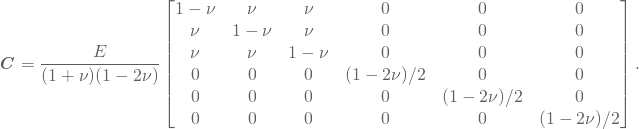

Moreover, define the strain vector and the stress vector to be

Then

![]()

such that we may write the bilinear form in 9 as

![]()

Also note that

![]()

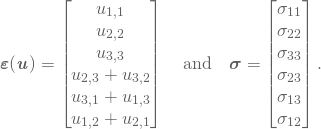

where

The entries in the global stiffness matrix may then be written as

![]()

Let ![]() be the domain of a given element, where the index

be the domain of a given element, where the index ![]() loops over all elements. The support of the NURBS are highly localized. To reduce computations, we should only integrate over functions which have support in

loops over all elements. The support of the NURBS are highly localized. To reduce computations, we should only integrate over functions which have support in ![]() . If we have

. If we have ![]() such \local shape functions, and let

such \local shape functions, and let ![]() and

and ![]() iterate over these functions, we may calculate the entries in the local stiffness matrix as

iterate over these functions, we may calculate the entries in the local stiffness matrix as

![]()

where

![]()

The local force vector may similarly be calculated by

(13) ![]()

The integration is done by quadrature formulas. One first maps to the parametric domain, and then map this domain to a parent domain. The element in the parametric domain, corresponding to ![]() , is given by

, is given by

![]()

In three dimension we want to map this domain into the parent domain given by

![]()

So given ![]() , we calculate

, we calculate ![]() by

by

![]()

![]()

![]()

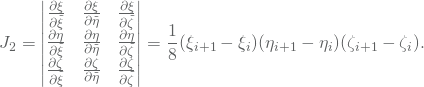

The Jacobian determinant for the parametric to parent mapping is thus given by

Similarly, we need the Jacobian for the mapping from the physical domain into the parametric domain. Given ![]() , we calculate

, we calculate ![]() by

by

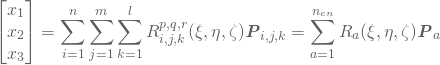

where ![]() are the control points and

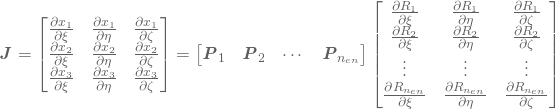

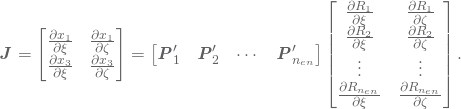

are the control points and ![]() are the NURBS basis functions which are computed by 3. Note that the last equality again comes from the highly localized support of the NURBS basis. The Jacobian matrix is thus given by

are the NURBS basis functions which are computed by 3. Note that the last equality again comes from the highly localized support of the NURBS basis. The Jacobian matrix is thus given by

such that the Jacobian determinant of this transformation is given by

![]()

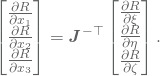

where the derivatives of the NURBS basis functions are computed by 4. The matrix ![]() contains derivatives of the NURBS functions w.r.t. physical coordinates. So we need to find expressions for

contains derivatives of the NURBS functions w.r.t. physical coordinates. So we need to find expressions for ![]() . By the chain rule we have

. By the chain rule we have

![]()

![]()

![]()

And thus, we may write

(14) ![]()

Multiplying with the inverse of the Jacobian, ![]() , from the right and taking the transpose on each side of the equation finally yields

, from the right and taking the transpose on each side of the equation finally yields

By successfully placing these expressions in the matrix ![]() , we may finally write

, we may finally write

![]()

By carefully sequentially placing all values for ![]() into the matrix

into the matrix ![]() we can compute the whole local stiffness matrix in one go by

we can compute the whole local stiffness matrix in one go by

![]()

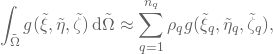

The integrals is approximated with quadrature rules. If we want to approximate the integral

![]()

the approximation by Gaussian quadrature is given by

where ![]() are the number of integration points, and

are the number of integration points, and ![]() and

and ![]() are given quadrature points and weights, respectively.

are given quadrature points and weights, respectively.

A somewhat similar procedure as shown above is needed to approximate the surface integral in \eqref{Eq:loading}. The full expression will depend on which part of the surface we integrate over. As an example, consider integrating over a surface where ![]() . As for the local stiffness matrix, we can compute the corresponding contribution to the load vector in one go as follows

. As for the local stiffness matrix, we can compute the corresponding contribution to the load vector in one go as follows

![]()

where

![]()

![]()

![]()

and

Here ![]() contains the

contains the ![]() and

and ![]() component of the control point

component of the control point ![]() . The integral may now be approximated by quadrature rules in two dimensions.

. The integral may now be approximated by quadrature rules in two dimensions.

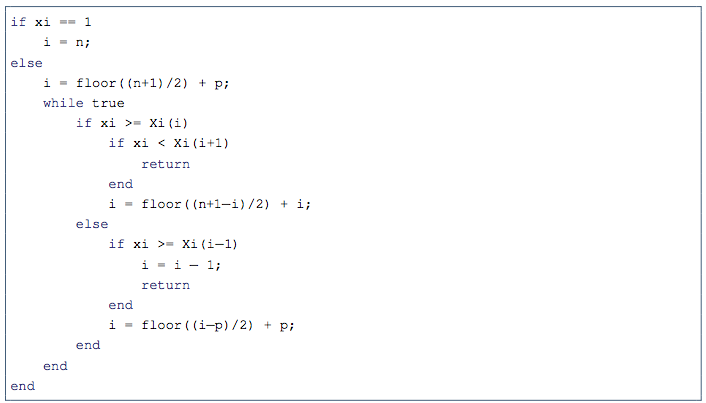

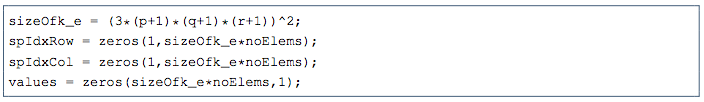

It is very important to note that the global stiffness matrix is very sparse. Not only is it a huge advantage in MATLAB to have the matrix in sparse format when solving the linear system, but also when assembling the matrix. If the matrix is made sparse only after assembly, then the initialization would require MATLAB to allocated place for a full stiffness matrix. This is very memory consuming and should be avoided if one wants to run the program with many degrees of freedom. A matrix in sparse format contains 3 columns; the first two represent the indices in the matrix and the third column represent the corresponding value. A way to do this is to first construct these three columns in three arrays. By noting that each element stiffness matrix has ![]() number of components, we initialize the arrays by the following.

number of components, we initialize the arrays by the following.

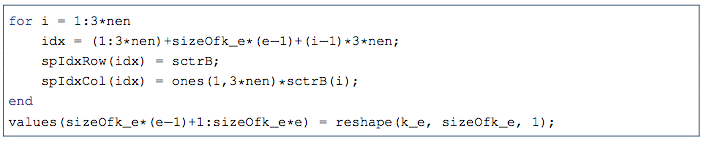

For each element, the element stiffness matrix is then added in the following way.

Note that we do not here sum overlapping element matrices, but this is automatically done when the sparse function is called in MATLAB.

![]()

That is, there will be index combinations which will repeat and therefore this method would require a lot more memory. The other obvious method is simply using

![]()

which typically results in the MATLAB warning “This sparse indexing expression is likely to be slow”. Experimentally we observe that the other method is 5 times as fast.

Post-processing

The visualizations is done in Paraview. Typically we create so called .vts files directly from MATLAB, and let Paraview do the illustrations from here.

We print the nodes, the displacement and every component of the stress for each node into such files. In addition we callculate the von Mises stress given by

![]()

which we shall use throughout the report in the visualizations.

One must make a grid in the classical FEM style in order to visualize the result. The mesh is simply created by finding the physical coordinates for each physical element. Moreover, for each corner of an element in the parametric space, the displacement and the components of stress can be calculated. Using the guide in “File formats for vtk version 4.2”, the file writing then follows by looping through each element and write data from each of the 8 corners each time.

MATLAB file structure

Hover the boxes to see subroutines and functions used. Click on a box to be directed to the source file.

Error analysis

It is always important to present some numerical evidence that the implementation is correct. This is typically done by finding an analytic solution and analyze the convergence of the numerical solution towards this analytic solution. Let the minimal order of the NURBS basis be given by

![]()

and let the maximal diameter of the elements (in the parametric space) be noted by ![]() . In the error analysis we shall start by the simplest open knot vectors. That is, for

. In the error analysis we shall start by the simplest open knot vectors. That is, for ![]() we have

we have ![]() , such that

, such that ![]() . In three dimensions we then have

. In three dimensions we then have ![]() . Generally we have

. Generally we have

![]()

where we assume that the polynomial order is equal in all directions and we refine uniformly. The energy norm is defined by

![]()

where we compute the bilinear form by

![]()

![]()

![Rendered by QuickLaTeX.com \begin{equation*} =\sum_{e=1}^{n_{el}}\int_{\Omega^e}\left[\boldsymbol\varepsilon(\vec{u}) - \boldsymbol\varepsilon(\vec{u}^h)\right]^\transpose \vec{C}\left[\boldsymbol\varepsilon(\vec{u}) - \boldsymbol\varepsilon(\vec{u}^h)\right] \idiff\Omega, \end{equation*}](http://org.ntnu.no/skimodeling/wp-content/ql-cache/quicklatex.com-62c2258ba43d8f20d4cf4be492f82a3b_l3.png)

using the same technique with transformation to the parent element for integration with quadratures. Thus, we need to compute ![]() in order to do the error analysis.

in order to do the error analysis.

In the article “Isogeometric analysis using polynomial splines over hierarchical t-meshes for two-dimensional elastic solids” we find the following estimate

![]()

where a quasi-uniform mesh refinement has been assumed. The constant ![]() is among other things dependent of the transformation from the parametric space to the physical space. It is not however depending on

is among other things dependent of the transformation from the parametric space to the physical space. It is not however depending on ![]() . So as

. So as ![]() become smaller, we expect the convergence to be of order

become smaller, we expect the convergence to be of order ![]() . Of course, the analytic solution (and then also the norm) should be independent of

. Of course, the analytic solution (and then also the norm) should be independent of ![]() , so we shall divide by

, so we shall divide by ![]() to normalize the error.

to normalize the error.

We want to do an error analysis with an analytic solution and a geometry which is to some extent similar to what we shall use when analyzing the flex of skis. Analytic solution (with corresponding data) on complex domains is in general hard to find. We shall restrict our self to a domain given by

![]()

where ![]() ,

, ![]() and

and ![]() is the width in

is the width in ![]() ,

, ![]() and

and ![]() direction of the beam, respectively. In the following we shall present several analytic solutions with corresponding illustrative plots and convergence plots. In the first two cases we choose these three parameters, such that the domain looks like a plank in a way that

direction of the beam, respectively. In the following we shall present several analytic solutions with corresponding illustrative plots and convergence plots. In the first two cases we choose these three parameters, such that the domain looks like a plank in a way that ![]() . In all test cases we want to construct solution which have homogeneous Dirichlet boundary conditions at

. In all test cases we want to construct solution which have homogeneous Dirichlet boundary conditions at ![]() . The boundary conditions corresponding to each solution and the analytic expression for

. The boundary conditions corresponding to each solution and the analytic expression for ![]() can be found in Maple files.

can be found in Maple files.

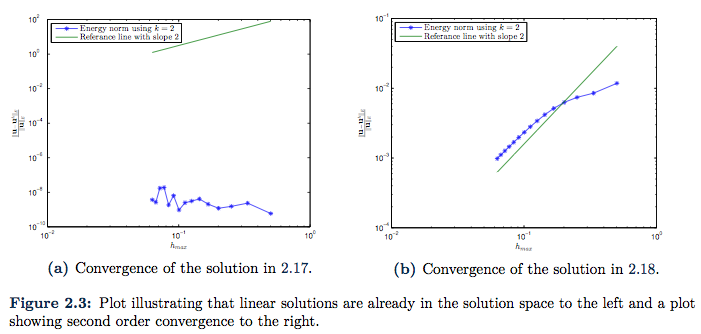

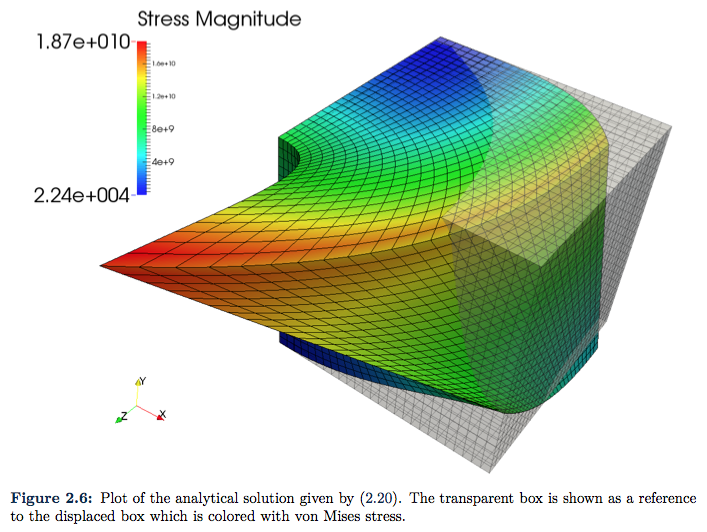

One of the simplest example that comes to mind is the solution

(15)

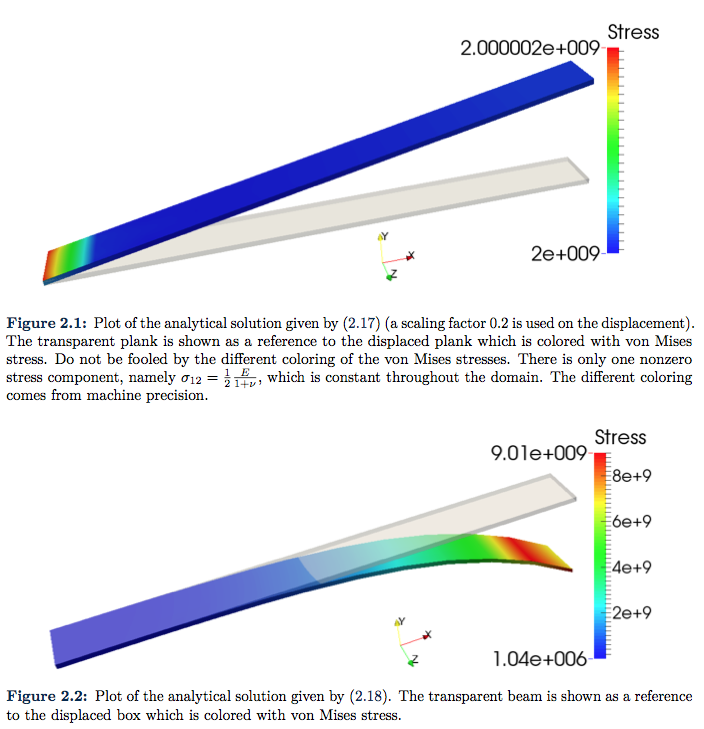

which is plotted in Figure 2.1. Of course, such linear solution is already in the search space ![]() , so we should expect the error to be near machine epsilon. And as we can see from the convergence plot given in Figure 2.3a this is indeed that case. Here the error is more likely to originate from the approximation in quadrature rules.

, so we should expect the error to be near machine epsilon. And as we can see from the convergence plot given in Figure 2.3a this is indeed that case. Here the error is more likely to originate from the approximation in quadrature rules.

A more complex solution is given by

(16)

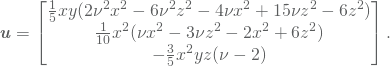

The plot of the solution is shown in Figure 2.2. The analysis is done with second order NURBS, so since the analytic solution has a third order polynomial in the ![]() direction, the solution is not in the search space

direction, the solution is not in the search space ![]() . The convergence plot is presented in Figure 2.3b. The order of convergence is not impressive in the beginning, but it get’s closer to the expected second order of convergence as the element size get’s smaller. This is probably due to the constant

. The convergence plot is presented in Figure 2.3b. The order of convergence is not impressive in the beginning, but it get’s closer to the expected second order of convergence as the element size get’s smaller. This is probably due to the constant ![]() , which we shall present evidence for in the next case.

, which we shall present evidence for in the next case.

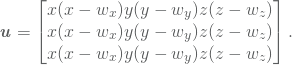

A more regular domain would be to choose ![]() , i.e. a cube. All though we have not presented so much theory on the case

, i.e. a cube. All though we have not presented so much theory on the case ![]() we shall present this case on the remaining three cases. For simplicity we construct the solution such that they are zero on the boundary of the cube. That is, we only have homogeneous Dirichlet boundary. Consider the solution

we shall present this case on the remaining three cases. For simplicity we construct the solution such that they are zero on the boundary of the cube. That is, we only have homogeneous Dirichlet boundary. Consider the solution

(17)

It does indeed satisfy the conditions with a rather ugly resulting function ![]() (the expression for this function is in fact so complex that we do not even bother to include it in the appendix). Once again, since this solution only has degree two for each polynomial in each of the spatial direction, the solution is thus an element in the search space

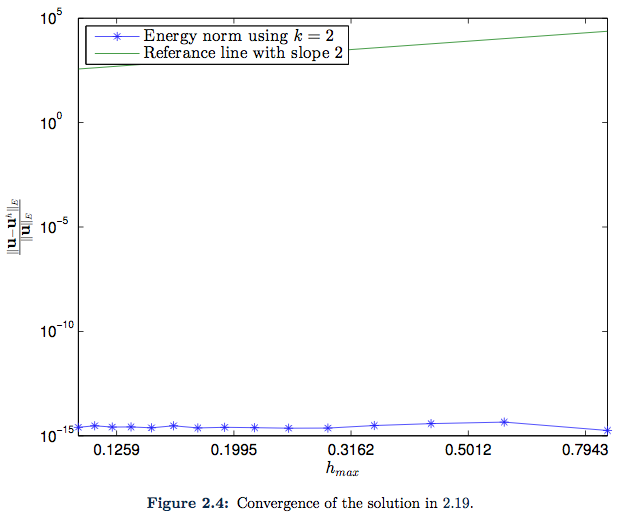

(the expression for this function is in fact so complex that we do not even bother to include it in the appendix). Once again, since this solution only has degree two for each polynomial in each of the spatial direction, the solution is thus an element in the search space ![]() , so we should expect the error to be close to machine epsilon again. As we can see from Figure 2.4 this is indeed the case.

, so we should expect the error to be close to machine epsilon again. As we can see from Figure 2.4 this is indeed the case.

By multiplying the solution in 17 by another factor ![]() ,

,

(18)

we get a solution which should not be in the search space ![]() . The condition on the boundary does of course still hold, but now we have an even more complex function

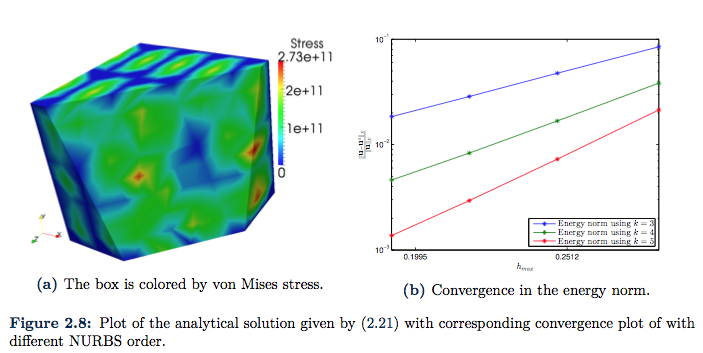

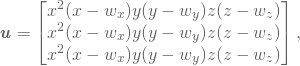

. The condition on the boundary does of course still hold, but now we have an even more complex function ![]() . The displacement of the cube is not so illustrative, rather, we cut of a part of the cube such that we can see the von Mises stress at a plane inside the cube as well. This plot is given in Figure 2.5a. The convergence plot is given in Figure 2.5b, and we here observe instantaneously convergence of second order.

. The displacement of the cube is not so illustrative, rather, we cut of a part of the cube such that we can see the von Mises stress at a plane inside the cube as well. This plot is given in Figure 2.5a. The convergence plot is given in Figure 2.5b, and we here observe instantaneously convergence of second order.

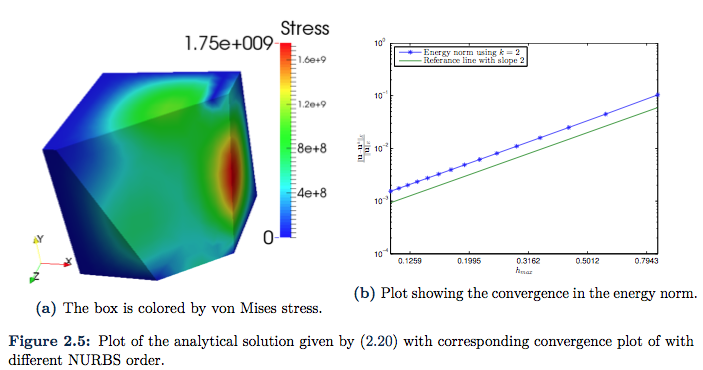

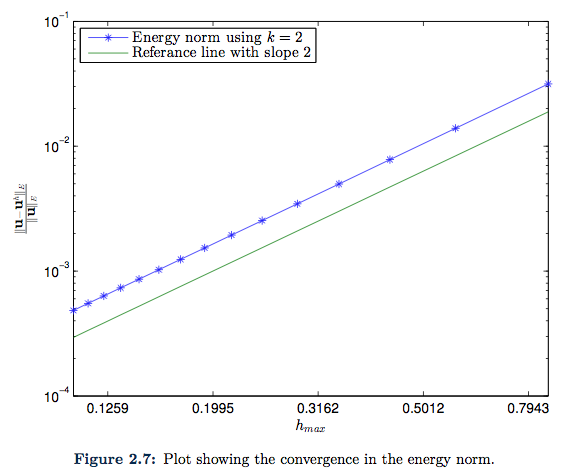

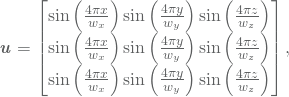

The finale example is presented just to illustrate that we have the same type of convergence when Neumann boundary condition is implemented. We plot the same analytic function in \eqref{Eq:secondAnalyticalSolution}, but here we choose ![]() . The resulting plots are given in Figure 2.6 and Figure 2.7.

. The resulting plots are given in Figure 2.6 and Figure 2.7.

All of the discussed solutions have been polynomial solution, and whould thus potentially be in the solution space ![]() if we elevate the order of the NURBS. Thus, the solutions presented so far does not fit so much for analysis on the order elevation of NURBS. A trigonometric solution given by

if we elevate the order of the NURBS. Thus, the solutions presented so far does not fit so much for analysis on the order elevation of NURBS. A trigonometric solution given by

(19)

will also satisfy homogeneous Dirichlet boundary conditions at the boundary. The resulting plots are given in Figure 2.8a and Figure 2.8b. In the convergence plot, we illustrate the different convergence rates by eleviating the NURBS order. It should be noted that the convergence is slightly better than ![]() .

.